OpenVAS (Open Vulnerability Assessment System) is an open source vulnerability scanning suite that tests servers for known vulnerabilities using a database of Network Vulnerability Tests (NVTs). OpenVAS is free software, and its components are licensed under the GNU General Public License (GNU GPL). Here is a short guide to setting up OpenVAS on CentOS 7 / RHEL 7.


Set Up the Repository:

Run the following command in a terminal to install the Atomic repository.

# wget -q -O - http://www.atomicorp.com/installers/atomic | sh
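Piping a downloaded script straight into `sh` runs it as root without a chance to read it first. A minimal alternative, sketched below, saves the installer to a file so you can review it before running it. Only the URL comes from the command above; the function name `fetch_atomic_installer` and the default file name `./atomic-installer.sh` are hypothetical.

```shell
# Hypothetical helper: download the Atomic installer to a local file so it
# can be reviewed before it is executed as root.
fetch_atomic_installer() {
  dest="${1:-./atomic-installer.sh}"   # assumed default file name
  # Same URL as the one-liner above; -q keeps wget quiet, -O sets the output file.
  wget -q -O "$dest" http://www.atomicorp.com/installers/atomic || return 1
  # Print the path so callers can capture it.
  printf '%s\n' "$dest"
}

# Usage: review the script, then run it.
#   f=$(fetch_atomic_installer) && less "$f" && sh "$f"
```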

Accept the license agreement when prompted.

PROVIDED BY ATOMICORP LIMITED YOU ACKNOWLEDGE AND AGREE:

THIS SOFTWARE AND ALL SOFTWARE PROVIDED IN THIS REPOSITORY IS

PROVIDED BY ATOMICORP LIMITED AS IS, IS UNSUPPORTED AND ANY

EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR

PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL ATOMICORP LIMITED, THE

COPYRIGHT OWNER OR ANY CONTRIBUTOR TO ANY AND ALL SOFTWARE PROVIDED

BY OR PUBLISHED IN THIS REPOSITORY BE LIABLE FOR ANY DIRECT,

INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES

(INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS

OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION)

HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT,

STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE)

ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED

OF THE POSSIBILITY OF SUCH DAMAGE.

====================================================================

THIS SOFTWARE IS UNSUPPORTED. IF YOU REQUIRE SUPPORTED SOFWARE

PLEASE SEE THE URL BELOW TO PURCHASE A NUCLEUS LICENSE AND DO NOT

PROCEED WITH INSTALLING THIS PACKAGE.

====================================================================

For supported software packages please purchase a Nucleus license:

https://www.atomicorp.com/products/nucleus.html

All atomic repository rpms are UNSUPPORTED.

Do you agree to these terms? (yes/no) [Default: yes] yes

Configuring the [atomic] yum archive for this system

Installing the Atomic GPG key: OK

Downloading atomic-release-1.0-19.el7.art.noarch.rpm: OK

The Atomic Rocket Turtle archive has now been installed and configured for your system

The following channels are available:

atomic – [ACTIVATED] – contains the stable tree of ART packages

atomic-testing – [DISABLED] – contains the testing tree of ART packages

atomic-bleeding – [DISABLED] – contains the development tree of ART packages

System Repository (RHEL only):

The OpenVAS installation pulls in additional packages from the internet. If your RHEL system does not have a Red Hat subscription, you need to set up the CentOS repository.

# vi /etc/yum.repos.d/centos.repo

Add the following lines.

[CentOS]

name=centos

baseurl=http://mirror.centos.org/centos/7/os/x86_64/

enabled=1

gpgcheck=0

PS: CentOS machines do not require the above repository setup; the system creates it automatically during installation.
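On RHEL, the same repository file can also be written without opening an editor. The sketch below is a hypothetical helper (`write_centos_repo`) that writes exactly the lines shown above; as in this guide, `gpgcheck=0` turns off package signature checking for this repository.

```shell
# Hypothetical helper: write the [CentOS] repository definition from this
# guide to the given file, as a non-interactive alternative to editing it in vi.
write_centos_repo() {
  repo_file="${1:-/etc/yum.repos.d/centos.repo}"
  # Quoted 'EOF' keeps the heredoc literal (no variable expansion).
  cat > "$repo_file" <<'EOF'
[CentOS]
name=centos
baseurl=http://mirror.centos.org/centos/7/os/x86_64/
enabled=1
gpgcheck=0
EOF
}

# Usage (as root on RHEL 7):
#   write_centos_repo && yum repolist enabled
```

Running `yum repolist enabled` afterwards should list the new `CentOS` repository.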

Install & Set Up OpenVAS:

Run the following command to install OpenVAS.

# yum -y install openvas

Yum downloads and installs OpenVAS together with its dependencies:

...

Transaction Summary

========================================================================

Install 1 Package (+262 Dependent packages)

Total download size: 84 M

Installed size: 280 M

Is this ok [y/d/N]: y

(1/263): bzip2-1.0.6-12.el7.x86_64.rpm | 52 kB 00:00:00

warning: /var/cache/yum/x86_64/7/atomic/packages/alien-8.90-2.el7.art.noarch.rpm: Header V3 RSA/SHA1 Signature, key ID 4520afa9: NOKEY

Public key for alien-8.90-2.el7.art.noarch.rpm is not installed

(2/263): alien-8.90-2.el7.art.noarch.rpm | 90 kB 00:00:00

...

Complete!

Once the installation completes, run the OpenVAS setup script.

# openvas-setup

The setup script first downloads the latest NVT database from the internet. When that finishes, it asks whether the web interface (GSAD) should accept connections from any IP address.

Step 2: Configure GSAD

The Greenbone Security Assistant is a Web Based front end for managing scans. By default it is configured to only allow connections from localhost.

Allow connections from any IP? [Default: yes]

Restarting gsad (via systemctl): [ OK ]

Next, configure the admin user.

Step 3: Choose the GSAD admin users password.

The admin user is used to configure accounts, Update NVT's manually, and manage roles.

Enter administrator username [Default: admin] : admin

Enter Administrator Password:

Verify Administrator Password:

Once setup completes, you should see the following message:

https://<IP>:9392
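Before switching to a browser, you can check from the server itself that the web interface answers. This is a sketch built on standard `curl` options; the helper name `gsa_is_up` and its defaults (127.0.0.1, port 9392) are assumptions of this example, and it only reports whether an HTTPS server responds, not whether login works.

```shell
# Hypothetical helper: succeed if an HTTPS server answers on the given host
# and port. Defaults are this machine and 9392, the GSA port shown above.
gsa_is_up() {
  host="${1:-127.0.0.1}"
  port="${2:-9392}"
  # -k: skip certificate verification (the GSA certificate is usually self-signed)
  # -s: no progress output; -o /dev/null: discard the page
  # --max-time 5: give up after 5 seconds
  curl -k -s -o /dev/null --max-time 5 "https://$host:$port/"
}

# Usage:
#   if gsa_is_up; then echo "GSA is answering"; else echo "GSA is not reachable"; fi
```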

Stop the iptables firewall so that other machines can reach the web interface on port 9392.

# systemctl stop iptables.service
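Stopping the firewall exposes every service on the host, and `systemctl stop` does not survive a reboot if the service is enabled. CentOS 7 ships firewalld as its default firewall; if that is what your system runs, a narrower option is to open only TCP port 9392. The sketch below uses standard `firewall-cmd` options; the function name `open_gsa_port` and the `DRY_RUN` switch (which prints the commands instead of running them) are hypothetical conventions of this example.

```shell
# Hypothetical helper: permanently open one TCP port (default 9392, the GSA
# port) in firewalld, then reload the rules. Set DRY_RUN=1 to only print the commands.
open_gsa_port() {
  port="${1:-9392}"
  # ${DRY_RUN:+echo} expands to "echo" when DRY_RUN is non-empty, else to nothing.
  ${DRY_RUN:+echo} firewall-cmd --permanent --add-port="$port/tcp" &&
    ${DRY_RUN:+echo} firewall-cmd --reload
}

# Usage (as root):
#   DRY_RUN=1 open_gsa_port   # preview the commands
#   open_gsa_port             # apply them
```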

Create a client certificate for the OpenVAS Manager.

# openvas-mkcert-client -n om -i

You do not need to enter any information; the certificate is created automatically.

…………………..++++++

………………………..++++++

e is 65537 (0x10001)

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter ‘.’, the field will be left blank.

-----

Country Name (2 letter code) [DE]:

State or Province Name (full name) [Some-State]:

Locality Name (eg, city) []:

Organization Name (eg, company) [Internet Widgits Pty Ltd]:

Organizational Unit Name (eg, section) []:

Common Name (eg, your name or your server’s hostname) []:

Email Address []:

Using configuration from /tmp/openvas-mkcert-client.2827/stdC.cnf

Check that the request matches the signature

Signature ok

The Subject’s Distinguished Name is as follows

countryName :PRINTABLE:’DE’

localityName :PRINTABLE:’Berlin’

commonName :PRINTABLE:’om’

Certificate is to be certified until Aug 5 19:43:32 2015 GMT (365 days)

Write out database with 1 new entries

Data Base Updated

Your client certificates are in /tmp/openvas-mkcert-client.2827 . You will have to copy them by hand.
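Each run of `openvas-mkcert-client` leaves its output in a numbered `/tmp/openvas-mkcert-client.*` directory (`.2827` in the run above). The hypothetical helper below prints the newest such directory so you can list the files you need to copy; where they belong depends on your setup and is not covered by this guide.

```shell
# Hypothetical helper: print the most recently modified
# openvas-mkcert-client.* directory under BASE (default /tmp).
# Prints nothing if no such directory exists.
latest_mkcert_dir() {
  base="${1:-/tmp}"
  # -d lists the directories themselves (not their contents); -t sorts newest first.
  ls -dt "$base"/openvas-mkcert-client.* 2>/dev/null | head -n 1
}

# Usage:
#   d=$(latest_mkcert_dir) && [ -n "$d" ] && ls -l "$d"
```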

Now rebuild the OpenVAS Manager database (if required).

# openvasmd --rebuild

Once that completes, start the OpenVAS Manager.

# openvasmd

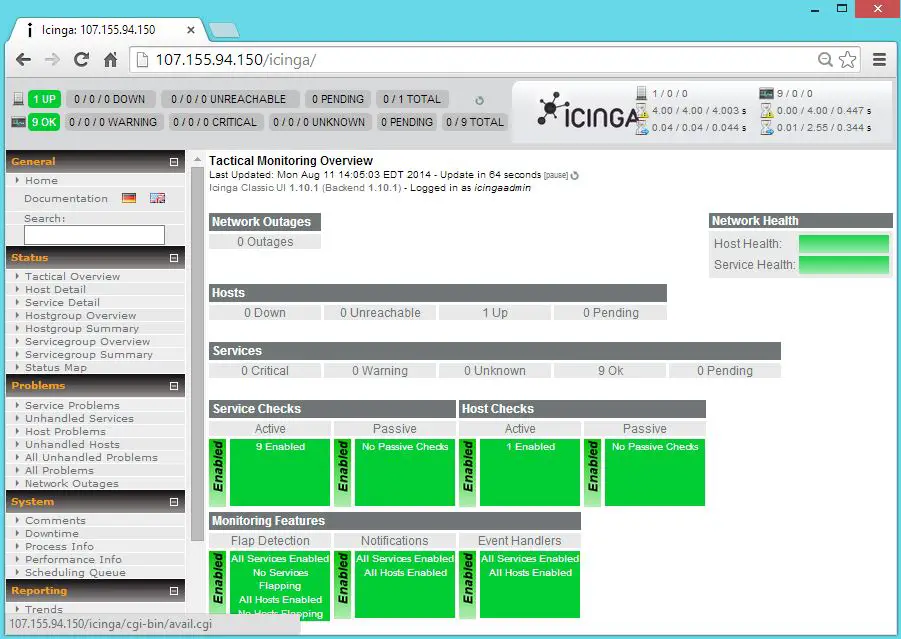

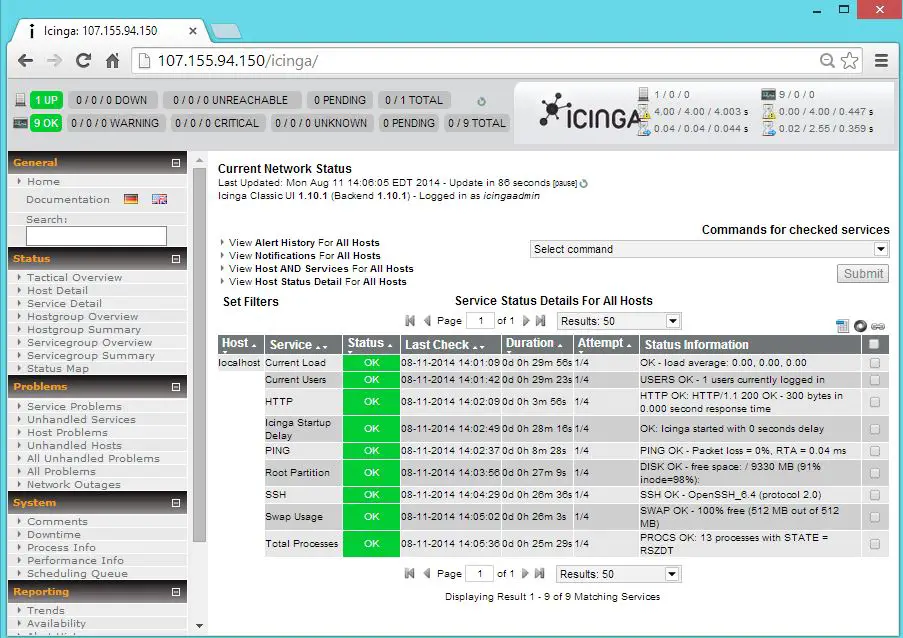

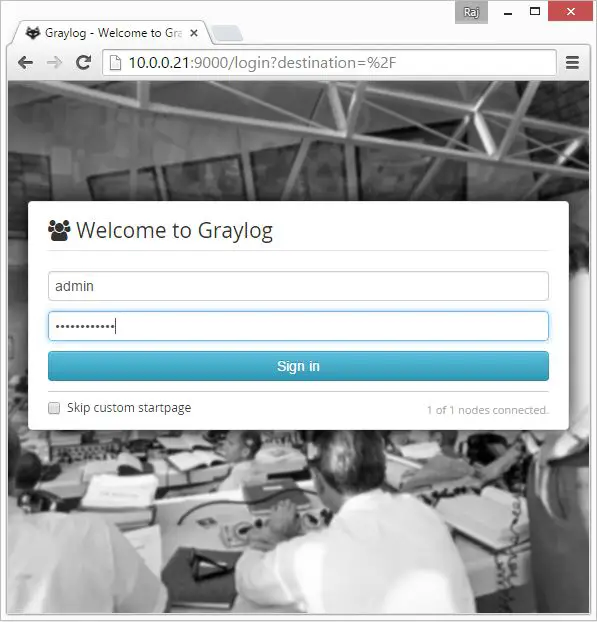

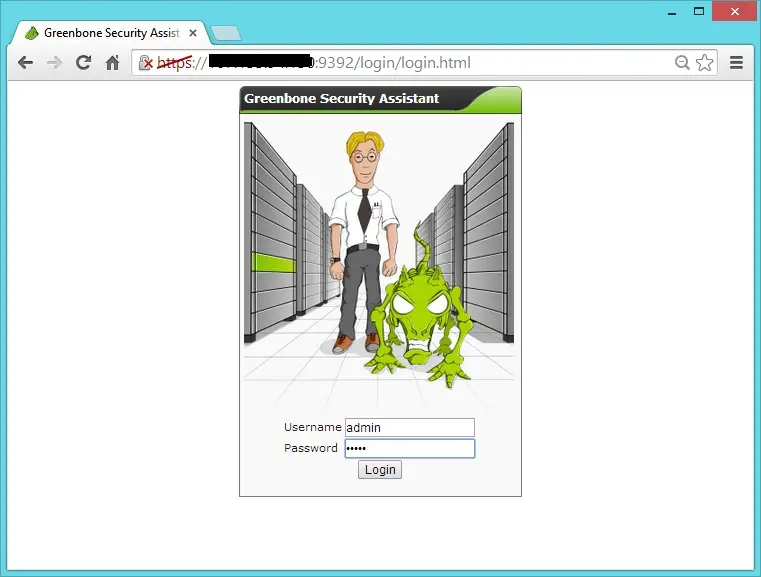

Open your browser and go to https://your-ip-address:9392. Log in as admin with the password you created during setup.
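If you forget the admin password, or want to set it from a script, the OpenVAS Manager binary has command-line options for this. The sketch below assumes an `openvasmd` release that supports `--user` together with `--new-password` (confirm with `openvasmd --help` on your system); the wrapper name `set_gsa_password` and its `DRY_RUN` switch are hypothetical. A password passed on the command line can show up in the process list and in shell history.

```shell
# Hypothetical wrapper: reset an OpenVAS Manager (GSA login) user's password.
# Assumes openvasmd supports --user=NAME --new-password=PASS (check --help).
# Set DRY_RUN=1 to print the command instead of running it.
set_gsa_password() {
  user="$1"
  pass="$2"
  if [ -z "$user" ] || [ -z "$pass" ]; then
    echo "usage: set_gsa_password USER NEW_PASSWORD" >&2
    return 2
  fi
  ${DRY_RUN:+echo} openvasmd --user="$user" --new-password="$pass"
}

# Usage (as root):
#   set_gsa_password admin 'new-password-here'
```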

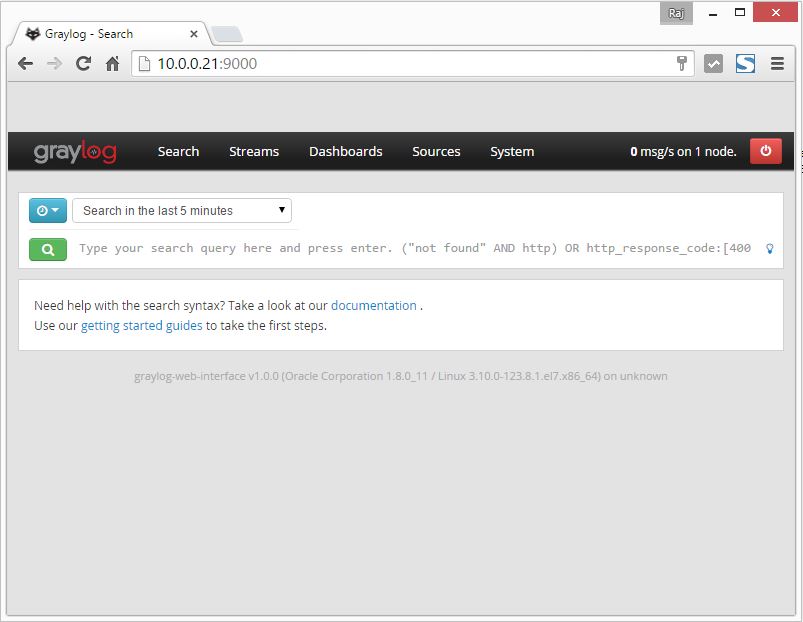

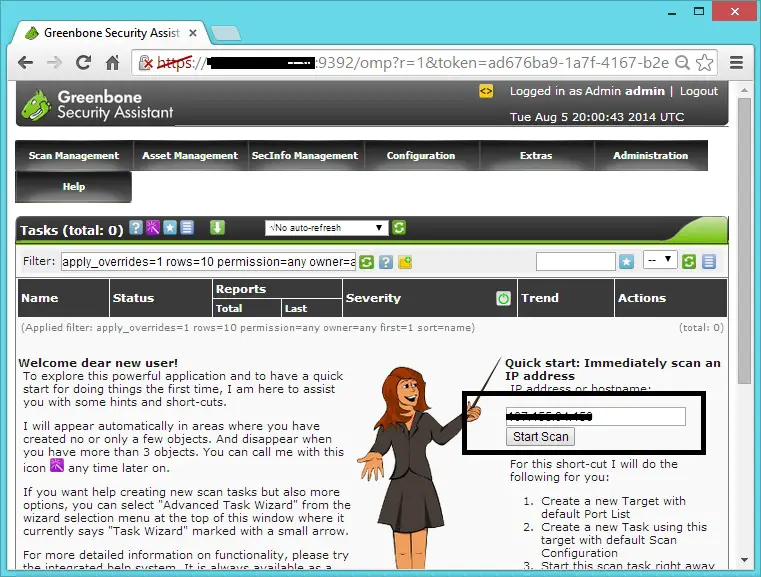

To start a quick scan, enter the target IP address in the quick scan field.

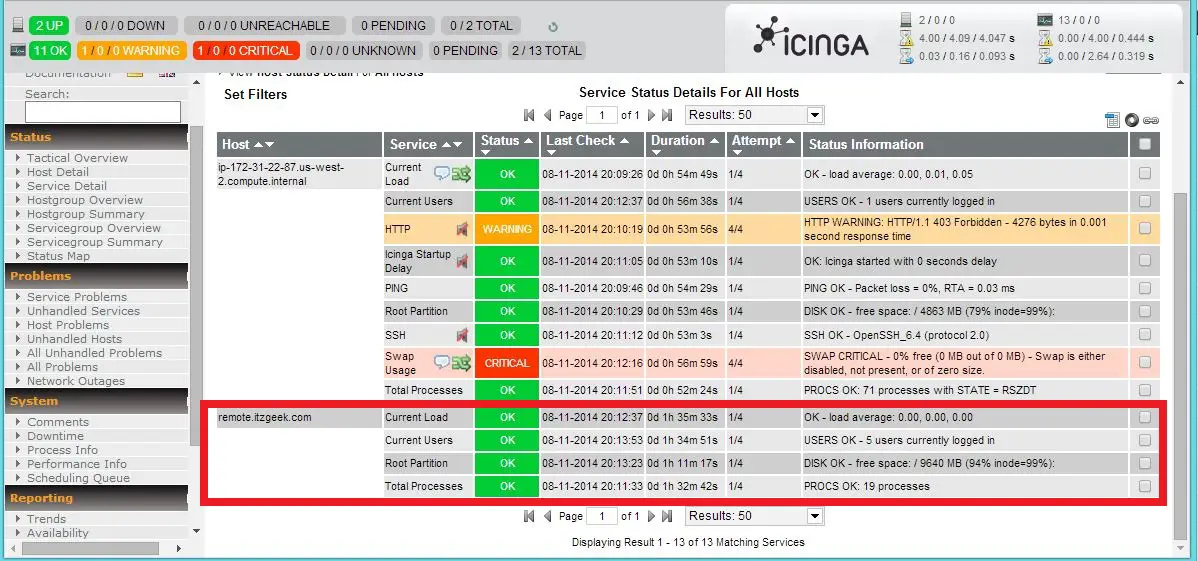

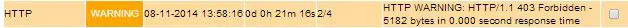

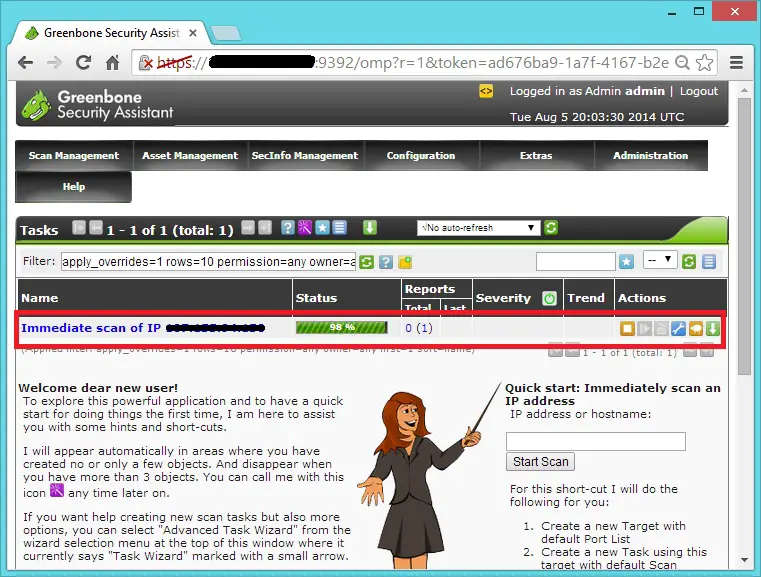

The new task then appears in the task list right away, as shown below. In this screenshot, the scan is 98% complete.

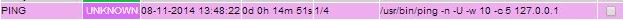

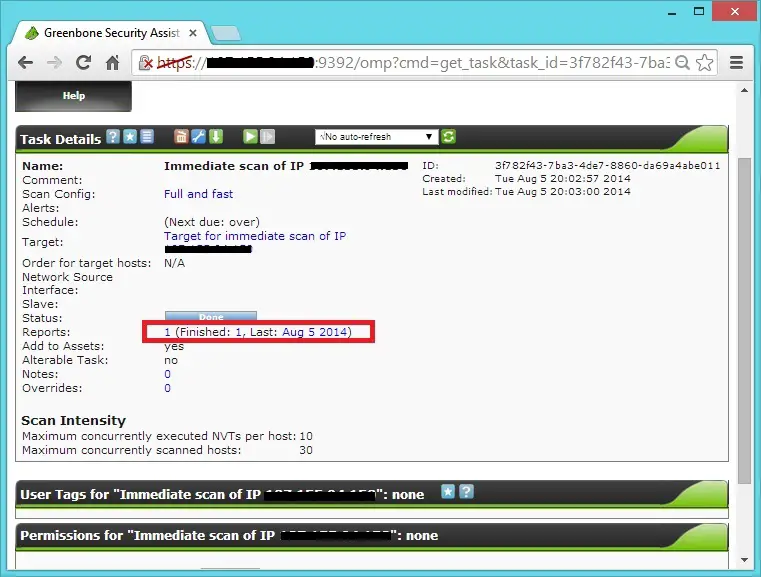

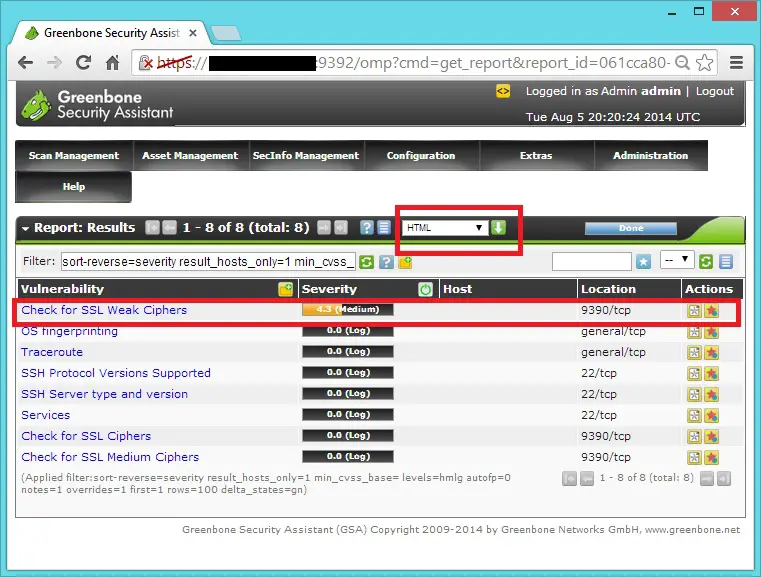

Click the task to view the scan details, as shown below. Once the scan is complete, click the “Date” link to open the report.

On the report page, you can download the report in several formats, such as PDF, HTML, and XML, or click each vulnerability to see its full details.

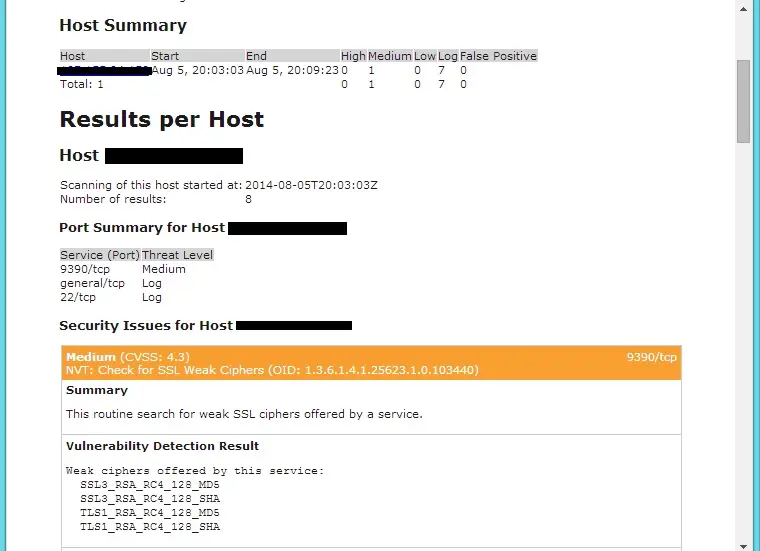

The actual report looks like the one below.

That’s all. Please leave your comments below.